Web QA Modernization Plan (Example)

An anonymized, high-level roadmap showing how I create a starting point for QA brainstorming, requirements gathering, and delivery planning.

Purpose of This Example

This is a hypothetical, anonymized modernization plan I might use as a starting point when working with a mission-driven digital content organization. The goal is not to prescribe tooling, but to create a shared picture of:

- How web QA work is organized today

- Where audience experience risk actually lives

- What should be tested, why, and in what priority

- How early automation and orchestration can build confidence over time

Objective

Strengthen audience experience signals around web releases, while maintaining an unhurried, sustainable delivery pace.

The organization offers web content that is many people’s first encounter with its broader work and mission. That means broken layouts, navigation issues, or subtle regressions can have an outsized impact on trust and engagement. At the same time, the team wants to avoid an over-engineered QA process that slows everything down.

Key Outcomes

- Clarity on what should be tested, why, and in what priority.

- Reduced manual regression burden, starting with the highest-value flows.

- Improved deploy predictability and audience-facing confidence.

- A lightweight, sustainable QA practice that fits the team’s culture and pace.

Guiding Principles

Thoughtful Early Wins

Start with a small, high-value automated suite and grow deliberately. We automate what matters most to the audience and to the mission, not everything at once.

Value-to-Effort ROI Matrix

To focus automation, I often use a simple value-to-effort lens:

- Value ≈ Audience impact × Frequency × Risk of breakage

- Effort ≈ Complexity of the flow × Testing difficulty × Tooling setup

This helps highlight flows that:

- Break often and are painful to test manually

- Are core to the mission (e.g., key content and donation experiences)

- Carry higher reputational or operational risk if they fail

For low-value or rarely used paths, we often keep testing manual or simplify the experience itself rather than forcing automation onto it.
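
To make the lens concrete, here is a minimal scoring sketch. It assumes each factor is rated 1 (low) to 5 (high) in a working session. It rates test difficulty so that a higher number means harder to automate, which keeps "higher effort" meaning "more work." The thresholds (value of at least 27, which is 3 × 3 × 3, and ROI of at least 1.0), the field names, and the example flows are all hypothetical and meant to be tuned by the team.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    """One user journey, rated 1 (low) to 5 (high) on each factor of the lens."""
    name: str
    audience_impact: int  # how much the audience and mission depend on it
    frequency: int        # how often it is used
    breakage_risk: int    # how often it breaks or is likely to break
    complexity: int       # steps, branches, and states in the flow
    test_difficulty: int  # e.g. third-party iframes or dynamic content raise this
    tooling_setup: int    # new infrastructure needed before it can be automated

    @property
    def value(self) -> int:
        return self.audience_impact * self.frequency * self.breakage_risk

    @property
    def effort(self) -> int:
        return self.complexity * self.test_difficulty * self.tooling_setup

    @property
    def roi(self) -> float:
        return self.value / self.effort


def triage(flows: list[Flow], min_value: int = 27, min_roi: float = 1.0):
    """Return (automation candidates ranked by ROI, flows kept manual)."""
    automate = [f for f in flows if f.value >= min_value and f.roi >= min_roi]
    manual = [f for f in flows if f not in automate]
    automate.sort(key=lambda f: f.roi, reverse=True)
    return automate, manual


# Hypothetical ratings from a working session; replace with the team's own.
EXAMPLE_FLOWS = [
    Flow("archive search", 2, 1, 3, 3, 2, 1),
    Flow("donation checkout", 5, 3, 4, 4, 3, 2),
    Flow("newsletter signup", 3, 3, 2, 2, 2, 1),
    Flow("article page", 5, 5, 3, 2, 1, 1),
]
```

With these example ratings, `triage(EXAMPLE_FLOWS)` ranks the article page (ROI 37.5) ahead of donation checkout (ROI 2.5) for automation and keeps archive search and newsletter signup manual. The numbers matter less than the conversation they force about why a flow scored the way it did.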

Phased Modernization Approach

The roadmap is divided into three main phases with an ongoing improvement track. Timelines are illustrative and would be tuned to the organization’s capacity and cadence.

Phase 1 (0–60 Days): Discovery & Foundations

- Map current web flows and identify “must not break” user journeys.

- Build a simple test-value matrix to rank flows by audience impact, frequency, and risk.

- Clarify what “good” looks like: define basic acceptance criteria for high-priority flows.

- Document the current QA process, environments, and release cadence.

Outcome: A shared view of priority flows, acceptance criteria, and validated journeys ready for initial automation and more consistent manual testing.
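
One lightweight way to capture the Phase 1 inventory is as plain data, so "must not break" journeys and their acceptance criteria live in one reviewable place. The sketch below is illustrative only: the `Journey` fields, the tier names, and the example journeys are hypothetical placeholders, not a prescribed schema.

```python
from dataclasses import dataclass, field


@dataclass
class Journey:
    name: str
    tier: str  # hypothetical tiers: "must-not-break", "important", "nice-to-have"
    steps: list[str]
    acceptance_criteria: list[str] = field(default_factory=list)


def missing_criteria(journeys: list[Journey]) -> list[str]:
    """Names of must-not-break journeys that do not yet define what "good" looks like."""
    return [j.name for j in journeys
            if j.tier == "must-not-break" and not j.acceptance_criteria]


# Illustrative inventory; journeys and criteria are placeholders.
JOURNEYS = [
    Journey(
        "read an article from the homepage",
        "must-not-break",
        ["open homepage", "select the top story", "article loads"],
        ["headline, byline, and body are visible at mobile and desktop widths",
         "primary navigation works from the article page"],
    ),
    Journey(
        "make a one-time donation",
        "must-not-break",
        ["open donate page", "choose an amount", "submit payment details"],
    ),
    Journey("browse the events calendar", "nice-to-have",
            ["open events page", "filter by month"]),
]
```

Here `missing_criteria(JOURNEYS)` returns `["make a one-time donation"]`, which doubles as a short to-do list for the next requirements conversation.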

Phase 2 (60–120 Days): Automation Scaffolding & Proof

- Select and implement web testing tools for the initial set of flows (for example, a framework such as Playwright or Cypress, optionally paired with the BrowserStack SDK for cross-browser and device coverage).

- Build a small, reliable smoke/regression suite focused on the top-ranked journeys.

- Establish naming conventions, folder structure, and simple reporting standards.

- Ensure tests are understandable by both QA and developers (and, where possible, product owners).

Outcome: A working automated suite that replaces repetitive manual checks on the highest-value flows and increases deploy confidence.
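
To illustrate the suite shape, naming convention, and reporting idea without depending on a specific framework, here is a deliberately simple HTTP-level smoke check using only the Python standard library. It is a swapped-in technique, not a substitute for browser tests in Playwright or Cypress: it does not execute JavaScript or click through flows, and only confirms that key pages respond and contain expected markup. The `smoke__<journey>__<expectation>` naming pattern, the paths, and the markers are hypothetical.

```python
import urllib.error
import urllib.request

# Hypothetical checks: test name -> (path, text markers that must appear in the HTML).
SMOKE_CHECKS = {
    "smoke__homepage__renders_nav": ("/", ["<nav", "Donate"]),
    "smoke__article__renders_body": ("/articles/sample", ["<article"]),
}


def evaluate(status: int, body: str, markers: list[str]) -> list[str]:
    """Return human-readable failure reasons; an empty list means the check passed."""
    failures = []
    if status != 200:
        failures.append(f"expected HTTP 200, got {status}")
    failures += [f"missing marker {m!r}" for m in markers if m not in body]
    return failures


def fetch(url: str, timeout: float = 10.0) -> tuple[int, str]:
    """GET a URL and return (status, body); errors become a status instead of raising."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status, resp.read().decode("utf-8", errors="replace")
    except urllib.error.HTTPError as err:  # server answered with 4xx/5xx
        return err.code, ""
    except urllib.error.URLError:          # DNS failure, refused connection, etc.
        return 0, ""


def run_smoke(base_url: str) -> dict[str, list[str]]:
    """Run every check and return a simple report: test name -> failure reasons."""
    report = {}
    for name, (path, markers) in SMOKE_CHECKS.items():
        status, body = fetch(base_url.rstrip("/") + path)
        report[name] = evaluate(status, body, markers)
    return report
```

Calling `run_smoke("https://staging.example.org")` (a placeholder URL) returns a dictionary that is easy to print, post, or turn into a pass/fail exit code. Keeping the pass/fail logic in `evaluate` separate from the network call keeps that logic easy to test on its own.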

Phase 3 (120–150 Days): Orchestration Layer

- Integrate automated tests into the CI/CD pipeline (e.g., GitHub Actions → test runner → reports).

- Define when tests run (per PR, nightly, pre-release) and the “confidence gates” required for release.

- Ensure test results surface in the same places engineers and QA already live (e.g., pull requests, chat alerts, dashboards).

Outcome: Automated tests become a visible, routine part of the development workflow, not a separate side activity.
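
The "confidence gates" can be written down as a small, explicit policy so everyone can see what blocks a merge or a release. The sketch below assumes three triggers (pull request, nightly, pre-release), two suite names (`smoke` and `regression`), and zero tolerated failures; all of these are hypothetical and would be tuned to the team's pace. Missing results count as a failure for blocking gates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gate:
    suites: tuple[str, ...]  # which suites must report at this trigger
    blocking: bool           # whether a failure stops the merge or release
    max_failures: int = 0    # failures tolerated before the gate fails


# Hypothetical policy: triggers, suite names, and thresholds are placeholders.
GATES = {
    "pull_request": Gate(suites=("smoke",), blocking=True),
    "nightly": Gate(suites=("smoke", "regression"), blocking=False),
    "pre_release": Gate(suites=("smoke", "regression"), blocking=True),
}


def evaluate_gate(trigger: str, failures_by_suite: dict[str, int]) -> tuple[bool, str]:
    """Return (may_proceed, message) for a trigger, given failure counts per suite."""
    gate = GATES[trigger]
    missing = [s for s in gate.suites if s not in failures_by_suite]
    if missing:
        # No results is treated as "not confident": blocking gates stop here.
        return not gate.blocking, f"{trigger}: no results for {', '.join(missing)}"
    failed = sum(failures_by_suite[s] for s in gate.suites)
    if failed <= gate.max_failures:
        return True, f"{trigger}: passed ({failed} failures)"
    if gate.blocking:
        return False, f"{trigger}: blocked ({failed} failures)"
    return True, f"{trigger}: {failed} failures reported, not blocking"
```

In a GitHub Actions job, for example, a step could run this after the test runner and exit with a nonzero status when `may_proceed` is false, which fails the check on the pull request. The message string is what I would surface in the PR, a chat alert, or a dashboard.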

Continuous Improvement (Beyond 150 Days)

- Expand coverage slowly, prioritizing flows where incidents or support tickets indicate real pain.

- Formalize response guides and lightweight post-release reviews when issues do escape to production.

- Periodically review the ROI of automated tests and retire low-value ones.

Outcome: A sustainable QA practice with growing coverage, better signals, and steady improvements in trust and predictability.
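
The periodic ROI review can start as a simple pass over per-test history. The sketch below assumes each test has run counts, failures traced to real regressions, failures that passed on rerun, and the value score of the journey it covers (from the value-to-effort lens). The 5% flakiness limit, the value cutoff of 27, and the added "fix" label for noisy tests are hypothetical choices, not fixed rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CheckStats:
    name: str
    runs: int
    real_failures: int   # failures traced to genuine regressions
    flaky_failures: int  # failures that passed on rerun with no code change
    flow_value: int      # value score of the journey this test covers


def review(stats: list[CheckStats], max_flaky_rate: float = 0.05,
           min_flow_value: int = 27) -> dict[str, str]:
    """Label each test "keep", "fix" (too noisy to trust), or "retire" (low value)."""
    verdicts = {}
    for s in stats:
        flaky_rate = s.flaky_failures / s.runs if s.runs else 0.0
        if flaky_rate > max_flaky_rate:
            verdicts[s.name] = "fix"
        elif s.real_failures == 0 and s.flow_value < min_flow_value:
            verdicts[s.name] = "retire"
        else:
            verdicts[s.name] = "keep"
    return verdicts
```

The labels are prompts for a human conversation rather than automatic deletions: a "retire" verdict on a low-value test that has never caught anything is a good candidate to drop, while a "fix" verdict flags a test whose noise is eroding trust in the suite's signal.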

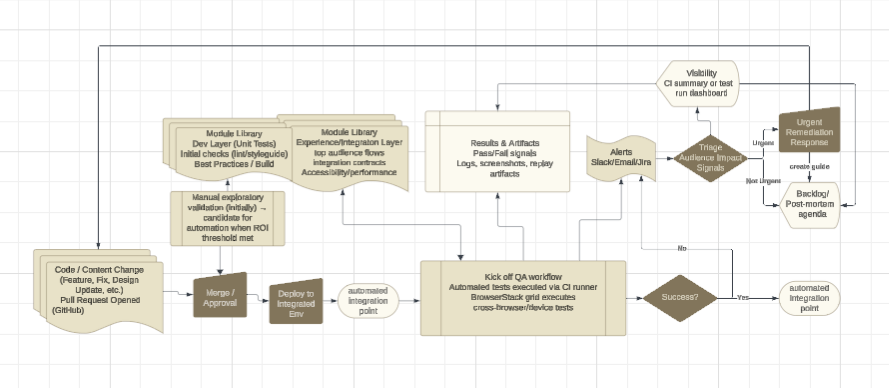

Process & Architecture Overview

Alongside the written roadmap, I typically sketch a simple diagram showing how audience flows, tests, CI/CD, and signals fit together. It gives teams a visual anchor for discussions about where to add checks, how results surface, and where ownership lives.

Early Wins This Pattern Delivers

- Stronger audience experience signals around key web journeys.

- Reduced manual regression load for the most repetitive, risky flows.

- More predictable releases with clear pre-deploy confidence checks.

- A shared language between product, engineering, and QA about “what good looks like” in web quality.

This pattern mirrors modernization work I’ve led elsewhere: we start by clarifying value, then introduce automation and orchestration where they strengthen trust and predictability without overwhelming the team.